As artificial intelligence (AI) takes on a larger role in software development, the tools that check code need checking too. AI code verifiers help ensure the correctness, efficiency, and scalability of software systems, but how do we know whether the verifiers themselves are performing well? That is the purpose of AI code verifier performance metrics analysis: a process for evaluating the accuracy and effectiveness of AI-driven verification systems, so that they maintain high standards in both functionality and efficiency.

In this article, we will explore the performance metrics used to evaluate AI code verifiers, why they matter, and how you can assess and optimize these metrics to build reliable software systems. Whether you’re an AI enthusiast, developer, or researcher, this guide will provide valuable insights into the world of AI code verification.

What is AI Code Verification?

AI code verification refers to the use of artificial intelligence to automate the process of checking software code for errors, bugs, or inefficiencies. By leveraging machine learning and natural language processing, AI systems can help developers quickly identify issues within their code, reducing the time spent on manual debugging and improving overall code quality.

AI-powered code verifiers can evaluate various aspects of the code, including correctness, security, performance, and adherence to coding standards. With the growing complexity of modern software systems, relying on AI for code verification is becoming a necessity in ensuring faster and more accurate software development.

The Importance of Performance Metrics in AI Code Verification

Performance metrics are essential tools that help measure the efficiency and effectiveness of an AI code verifier. These metrics provide developers with insights into how well the AI verifier is performing in terms of accuracy, speed, and reliability. By analyzing these metrics, developers can make informed decisions about how to improve their AI verification systems.

Some key reasons why performance metrics are crucial include:

- Accuracy: Performance metrics help assess the correctness of the AI’s output, ensuring that the verifier accurately identifies code issues.

- Efficiency: AI verifiers should be able to process code quickly without consuming excessive computational resources. Performance metrics measure the speed and resource usage of these systems.

- Reliability: A reliable AI code verifier can identify issues consistently over time. Performance metrics track the stability and consistency of the verifier.

- User Trust: Performance metrics help build trust with users by ensuring that the AI system is dependable and effective.

Key Performance Metrics for AI Code Verifiers

1. Accuracy

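Accuracy is perhaps the most fundamental metric in AI code verification. It measures the share of all verdicts the verifier gets right: real problems it flags plus correct code it leaves alone, divided by everything it checked. Higher accuracy means fewer false positives (correct code incorrectly flagged) and fewer false negatives (real problems missed) overall. Because most code in a typical codebase is bug-free, accuracy alone can look high even when a verifier misses many bugs, which is why it is usually read alongside precision and recall.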

Real-World Example:

Consider an AI verifier used in an e-commerce website’s backend system. The verifier might detect issues like inefficient database queries or unoptimized code. A high accuracy rate would mean fewer false positives (i.e., it won’t incorrectly flag functioning code) and fewer false negatives (i.e., it won’t miss critical bugs).
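To make the definition concrete, here is a minimal sketch of computing accuracy from the four possible outcomes. The `ConfusionCounts` class and the numbers in the example run are hypothetical, chosen only to show how a verifier that flags nothing can still score well when bugs are rare.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Outcome counts for one verifier run against labelled code snippets."""
    true_positives: int   # buggy code that was flagged
    false_positives: int  # correct code that was flagged
    true_negatives: int   # correct code that was left alone
    false_negatives: int  # buggy code that was missed


def accuracy(counts: ConfusionCounts) -> float:
    """Fraction of all snippets the verifier classified correctly."""
    total = (counts.true_positives + counts.false_positives
             + counts.true_negatives + counts.false_negatives)
    if total == 0:
        raise ValueError("no labelled snippets to score")
    return (counts.true_positives + counts.true_negatives) / total


# Hypothetical run: 1,000 snippets, only 20 of which contain a bug.
# This verifier flags nothing at all, so it misses every bug.
lazy = ConfusionCounts(true_positives=0, false_positives=0,
                       true_negatives=980, false_negatives=20)
print(accuracy(lazy))  # 0.98
```

The 98% score here says little about bug-finding ability, which is the reason accuracy is rarely reported on its own.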

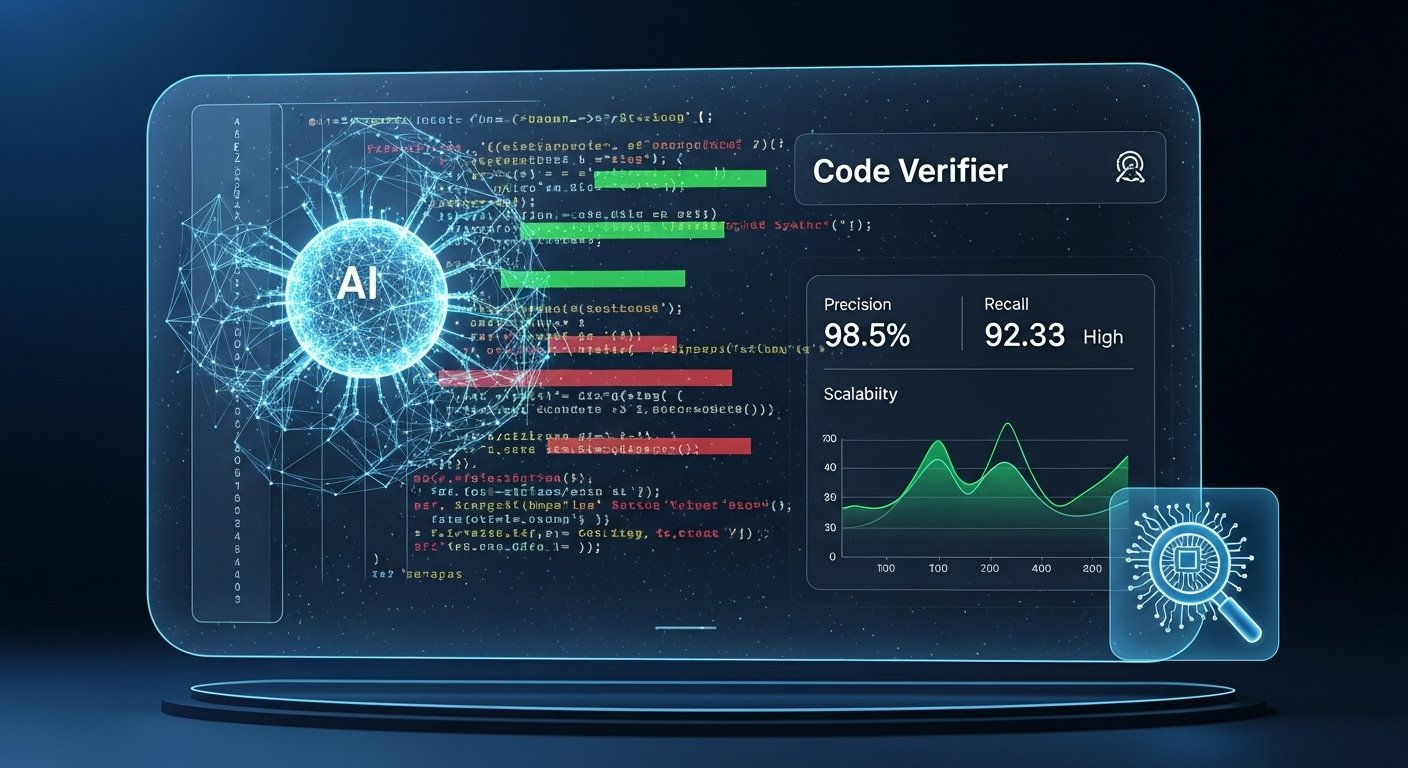

2. Precision and Recall

Precision and recall are two additional metrics that complement accuracy in AI code verification.

- Precision measures how many of the identified issues are actual problems (i.e., the proportion of true positives among all flagged issues).

- Recall measures how many actual problems the AI system successfully identifies (i.e., the proportion of true positives among all actual problems).

Example:

Imagine a scenario where the AI verifier flags 100 issues in the code. Out of those, 90 are real problems (true positives), and 10 are false positives. Precision in this case would be 90% (90/100). If there were 100 real issues in the code, and the AI identified 90 of them, the recall would also be 90%.
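The arithmetic in this example can be reproduced with a few lines of Python. This is a small sketch, not a full evaluation harness; the F1 score (the harmonic mean of precision and recall) is an addition not discussed above, included because it is a common way to summarize the two numbers as one.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Share of flagged issues that are real problems."""
    flagged = true_positives + false_positives
    return true_positives / flagged if flagged else 0.0


def recall(true_positives: int, false_negatives: int) -> float:
    """Share of real problems that were flagged."""
    actual = true_positives + false_negatives
    return true_positives / actual if actual else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Numbers from the example: 100 issues flagged, 90 of them real,
# and 100 real issues in the code, so 10 were missed.
tp, fp, fn = 90, 10, 10
p = precision(tp, fp)
r = recall(tp, fn)
print(f"precision={p:.2f} recall={r:.2f} f1={f1_score(p, r):.2f}")
# precision=0.90 recall=0.90 f1=0.90
```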

3. Speed and Latency

Speed and latency are essential performance metrics for AI code verifiers, especially in large software projects. Speed, or throughput, is how much code the verifier processes per unit of time, while latency is the delay between submitting code and receiving feedback.

AI verifiers that perform quickly help speed up the development process, allowing developers to receive immediate feedback and fix issues before proceeding to the next phase of development.
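One way to measure both numbers is to time each call and summarize the results. The sketch below assumes the verifier is exposed as a plain Python callable; `toy_verify` is a hypothetical stand-in, and a real verifier would be wrapped the same way. Reporting a high percentile alongside the median is a common practice because a few slow responses affect developers more than the average suggests.

```python
import statistics
import time
from typing import Callable, Sequence


def measure_latencies(verify: Callable[[str], object],
                      snippets: Sequence[str]) -> list[float]:
    """Return the wall-clock latency of each call, in seconds."""
    latencies = []
    for snippet in snippets:
        start = time.perf_counter()
        verify(snippet)
        latencies.append(time.perf_counter() - start)
    return latencies


def summarize(latencies: Sequence[float]) -> dict[str, float]:
    """Median latency, 95th-percentile latency, and overall throughput."""
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(latencies, n=20)[-1]
    total = sum(latencies)
    return {
        "median_s": statistics.median(latencies),
        "p95_s": p95,
        "snippets_per_s": len(latencies) / total if total > 0 else float("inf"),
    }


def toy_verify(source: str) -> bool:
    """Stand-in verifier (hypothetical): flags snippets that call eval()."""
    return "eval(" in source


samples = ["x = 1", "eval(user_input)", "print('ok')"] * 50
print(summarize(measure_latencies(toy_verify, samples)))
```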

4. Computational Efficiency

AI-powered code verifiers can be resource-intensive, especially when dealing with large codebases. Computational efficiency measures how well the verifier utilizes system resources like CPU, memory, and storage while performing its tasks.

A verifier that is computationally efficient will consume fewer resources, making it suitable for deployment in production environments where performance and scalability are crucial.
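As a rough illustration, CPU time and peak memory for a single run can be sampled with the Python standard library. This is a sketch under stated assumptions: `toy_verify` is a hypothetical stand-in, and `tracemalloc` only sees memory allocated through Python, so model weights held by native libraries or a GPU would need a different tool.

```python
import time
import tracemalloc
from typing import Callable


def profile_run(verify: Callable[[str], object], source: str) -> tuple[float, int]:
    """Return (CPU seconds, peak traced bytes) for one verifier call."""
    tracemalloc.start()
    cpu_start = time.process_time()
    try:
        verify(source)
        cpu_seconds = time.process_time() - cpu_start
        # get_traced_memory() returns (current, peak) in bytes.
        _, peak_bytes = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return cpu_seconds, peak_bytes


def toy_verify(source: str) -> list[int]:
    """Stand-in verifier (hypothetical): line numbers longer than 79 characters."""
    return [number for number, line in enumerate(source.splitlines(), 1)
            if len(line) > 79]


# Synthetic input: 5,000 lines, every tenth one deliberately too long.
source = "\n".join("x = 1" if i % 10 else "y = '" + "a" * 100 + "'"
                   for i in range(5_000))
cpu_seconds, peak_bytes = profile_run(toy_verify, source)
print(f"CPU: {cpu_seconds:.4f}s, peak Python memory: {peak_bytes / 1024:.1f} KiB")
```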

5. False Positives and False Negatives

In any code verification system, there are bound to be false positives and false negatives:

- False Positives: When the verifier incorrectly flags correct code as erroneous.

- False Negatives: When the verifier misses actual errors in the code.

Managing the balance between false positives and false negatives is crucial. A high rate of false positives can overwhelm developers, leading to wasted time spent investigating non-issues, while a high rate of false negatives can leave critical bugs undetected.
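The trade-off is easiest to see when the verifier attaches a confidence score to each finding and only reports findings above a threshold. That scoring behaviour is an assumption made for this sketch, and the scores and labels below are invented for illustration.

```python
from typing import Sequence


def count_errors(scores: Sequence[float], is_bug: Sequence[bool],
                 threshold: float) -> tuple[int, int]:
    """Return (false positives, false negatives) at a given flagging threshold."""
    false_positives = sum(1 for score, bug in zip(scores, is_bug)
                          if score >= threshold and not bug)
    false_negatives = sum(1 for score, bug in zip(scores, is_bug)
                          if score < threshold and bug)
    return false_positives, false_negatives


# Hypothetical verifier confidence for eight snippets, with ground-truth labels.
scores = [0.95, 0.80, 0.65, 0.55, 0.40, 0.30, 0.20, 0.10]
is_bug = [True, True, False, True, False, True, False, False]

for threshold in (0.25, 0.50, 0.75):
    fp, fn = count_errors(scores, is_bug, threshold)
    print(f"threshold={threshold:.2f} false_positives={fp} false_negatives={fn}")
# threshold=0.25 false_positives=2 false_negatives=0
# threshold=0.50 false_positives=1 false_negatives=1
# threshold=0.75 false_positives=0 false_negatives=2
```

Raising the threshold trades false positives for false negatives, so the right setting depends on which kind of mistake is more costly for the project.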

6. Scalability

Scalability measures the AI code verifier’s ability to handle increasing amounts of code or complexity without significant drops in performance. As software systems grow, it is essential for the verifier to scale accordingly, ensuring consistent quality checks as the project expands.
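A simple scalability check is to run the verifier on inputs of increasing size and watch how the time per line changes. The sketch below uses synthetic source code and a hypothetical `toy_verify` stand-in; if the time per line stays roughly flat as the input grows, the verifier is scaling about linearly.

```python
import time
from typing import Callable, Sequence


def best_time(verify: Callable[[str], object], source: str,
              repeats: int = 3) -> float:
    """Fastest of several runs, in seconds, to reduce timing noise."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        verify(source)
        timings.append(time.perf_counter() - start)
    return min(timings)


def scaling_report(verify: Callable[[str], object],
                   line_counts: Sequence[int]) -> list[tuple[int, float]]:
    """Time the verifier on synthetic inputs of each requested size."""
    report = []
    for count in line_counts:
        source = "\n".join(f"value_{i} = {i}" for i in range(count))
        report.append((count, best_time(verify, source)))
    return report


def toy_verify(source: str) -> int:
    """Stand-in verifier (hypothetical): counts lines longer than 79 characters."""
    return sum(1 for line in source.splitlines() if len(line) > 79)


report = scaling_report(toy_verify, [1_000, 10_000, 100_000])
for count, seconds in report:
    print(f"{count:>7} lines: {seconds * 1e6 / count:.3f} microseconds per line")
```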

7. Robustness and Stability

AI systems should be robust and stable, meaning they can handle unexpected inputs, edge cases, or changes in code structure without crashing or producing unreliable results. Stability ensures that the AI verifier performs consistently over time.
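Both properties can be tested directly: feed the verifier awkward inputs, check that it does not crash, and check that repeated runs on the same input agree. The two verifiers below are hypothetical stand-ins built on Python's `ast` module, one that handles unparsable code and one that does not.

```python
import ast
from typing import Callable, Sequence


def check_robustness(verify: Callable[[str], object], inputs: Sequence[str],
                     repeats: int = 3) -> list[tuple[str, str]]:
    """Return (input preview, problem) pairs for crashes or unstable results."""
    failures = []
    for source in inputs:
        try:
            results = [verify(source) for _ in range(repeats)]
        except Exception as exc:
            failures.append((source[:30], f"crashed: {type(exc).__name__}"))
            continue
        if any(result != results[0] for result in results[1:]):
            failures.append((source[:30], "inconsistent results"))
    return failures


def robust_verify(source: str) -> list[str]:
    """Stand-in verifier (hypothetical) that reports unparsable code as an issue."""
    try:
        ast.parse(source)
    except SyntaxError:
        return ["syntax error"]
    return []


def fragile_verify(source: str) -> list[str]:
    """Stand-in verifier (hypothetical) that assumes its input always parses."""
    ast.parse(source)
    return []


# Edge cases: empty file, malformed code, ordinary code, non-ASCII identifier.
edge_cases = ["", "def broken(:", "x = 1", "π = 3.14"]
print(check_robustness(robust_verify, edge_cases))   # []
print(check_robustness(fragile_verify, edge_cases))  # [('def broken(:', 'crashed: SyntaxError')]
```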

Real-World Applications of AI Code Verifiers

AI-powered code verifiers have found widespread use across various industries, including:

- Software Development: Automated code review tools such as DeepCode (now Snyk Code) use machine learning to detect bugs and vulnerabilities in the code, while established analyzers such as SonarQube rely mainly on rule-based static analysis. Code-generation models such as Codex can also be prompted to review code, although they are not dedicated verifiers.

- Security: AI verifiers are used to identify security vulnerabilities in code, such as SQL injection flaws or cross-site scripting (XSS) vulnerabilities.

- Continuous Integration and Deployment (CI/CD): In modern CI/CD pipelines, AI verifiers help automate the process of code verification during software updates and deployments.

Example: AI in Open-Source Projects

In open-source projects, where contributors might come from all over the world, AI verifiers help ensure code quality by automatically reviewing pull requests and suggesting improvements or flagging errors. GitHub Copilot, for instance, uses AI to help developers write code more efficiently by suggesting code as they type, although it is primarily a coding assistant rather than a dedicated verifier.

How to Improve AI Code Verifier Performance Metrics

Improving AI code verifier performance metrics is an ongoing process that requires fine-tuning the AI model, optimizing computational resources, and updating the system based on new insights. Here are some strategies to enhance performance:

1. Training with Diverse Data

AI code verifiers perform best when trained on diverse and representative datasets. By exposing the system to a wide variety of coding styles, programming languages, and edge cases, the AI becomes better at identifying different types of errors across multiple scenarios.

2. Regular Model Updates

AI models do not stay current on their own, so it’s crucial to retrain or update them regularly with the latest bug patterns, coding standards, and best practices. This ensures the AI verifier remains effective as programming languages and development practices evolve.

3. Optimize for Speed

Speed can be optimized by choosing efficient algorithms and techniques, such as incremental analysis or parallel processing, to quickly process large codebases.
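Incremental analysis, for example, can be as simple as skipping files whose contents have not changed since the last run. The sketch below is one possible approach, not a description of any particular tool: the `IncrementalVerifier` class and `toy_verify` function are hypothetical, and the cache is keyed on a SHA-256 hash of each file's contents.

```python
import hashlib
from typing import Callable


class IncrementalVerifier:
    """Wraps a verifier and re-runs it only when a file's contents change."""

    def __init__(self, verify: Callable[[str], object]) -> None:
        self._verify = verify
        self._cache: dict[str, tuple[str, object]] = {}  # path -> (digest, result)
        self.calls = 0  # number of real verifier invocations

    def check(self, path: str, source: str) -> object:
        digest = hashlib.sha256(source.encode("utf-8")).hexdigest()
        cached = self._cache.get(path)
        if cached is not None and cached[0] == digest:
            return cached[1]  # unchanged since last run: reuse the old result
        self.calls += 1
        result = self._verify(source)
        self._cache[path] = (digest, result)
        return result


def toy_verify(source: str) -> bool:
    """Stand-in verifier (hypothetical): flags files that call eval()."""
    return "eval(" in source


verifier = IncrementalVerifier(toy_verify)
verifier.check("app.py", "x = 1")
verifier.check("app.py", "x = 1")           # cache hit, verifier not re-run
verifier.check("app.py", "x = eval(data)")  # contents changed, verifier re-run
print(verifier.calls)  # 2
```

This only helps when findings depend on a single file; a verifier that reasons across files would also need to invalidate the results of dependent files.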

4. Fine-Tune for Precision

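Techniques like active learning can help fine-tune the verifier for precision. In active learning, the system selects the examples it is least certain about and sends them to human reviewers for labelling, and the model is then retrained on those labels. Over time this can reduce false positives and improve the accuracy of identified issues.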
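A common form of active learning is uncertainty sampling: pick the snippets where the model's predicted bug probability is closest to 0.5 and send those to reviewers first. The sketch below shows only the selection step; the file names and probabilities are invented for illustration.

```python
def select_for_labelling(bug_probability: dict[str, float], budget: int) -> list[str]:
    """Pick the snippets whose predicted bug probability is closest to 0.5."""
    ranked = sorted(bug_probability,
                    key=lambda name: abs(bug_probability[name] - 0.5))
    return ranked[:budget]


# Hypothetical model outputs: probability that each file contains a bug.
predictions = {
    "auth.py": 0.97,    # confidently buggy
    "cart.py": 0.52,    # very uncertain
    "utils.py": 0.03,   # confidently clean
    "search.py": 0.41,  # uncertain
    "db.py": 0.60,      # somewhat uncertain
}
print(select_for_labelling(predictions, budget=2))  # ['cart.py', 'search.py']
```

Labels collected this way are added to the training set before the next retraining round, which concentrates reviewer effort on the cases where the model has the most to learn.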

Conclusion

AI code verifier performance metrics analysis is vital for ensuring that AI systems maintain high standards of accuracy, efficiency, and reliability. By measuring key performance indicators like accuracy, precision, recall, speed, and scalability, developers can create more effective AI-driven verification systems that enhance the quality of software code.

As AI continues to play an increasingly important role in software development, understanding and optimizing these metrics will be crucial in maintaining trust in AI-powered solutions. By regularly analyzing and improving the performance of AI code verifiers, developers can ensure they are building reliable and efficient systems that meet the demands of modern software development.

FAQs

Q1: What are the most important performance metrics for AI code verifiers?

The most important metrics include accuracy, precision, recall, speed, latency, computational efficiency, and scalability. These metrics help ensure the AI verifier is effective and efficient.

Q2: How does an AI code verifier help in software development?

AI code verifiers help identify bugs, vulnerabilities, and inefficiencies in code automatically, reducing the time developers spend on manual debugging and improving overall software quality.

Q3: Can AI code verifiers detect security vulnerabilities?

Yes, AI code verifiers can be trained to detect various security vulnerabilities, including SQL injection flaws and cross-site scripting (XSS) vulnerabilities.

Q4: How can I improve the performance of an AI code verifier?

Improving performance can be achieved by training the AI with diverse data, regularly updating the model, optimizing for speed, and fine-tuning the verifier for precision.
